Roughly 30 years have passed since full-scale research on virtual reality began, yet only in the past five years or so has VR based on sight and hearing become familiar to the general public. VR technology has only recently begun to be applied in various fields. The key to the next breakthrough is adding the sense of touch to interfaces that have so far relied on sight and sound alone. Here we introduce cutting-edge haptics research at the Graduate School of Frontier Sciences (GSFS).

(The interview and the original Japanese text: Kazumasa Furui)

- VR Research Is Advancing: From Just Seeing and Hearing to Touching

- Mid-Air Haptics by "Airborne Ultrasound"

- What Should We Expect from Combining VR and AI?

- New Technologies and Applications of VR

- The Future of VR Research at the GSFS

1. VR Research Is Advancing: From Just Seeing and Hearing to Touching

The COVID-19 pandemic limited face-to-face communication, creating a need for online classes, meetings, and telework. Virtual reality (VR) is now attracting attention once again as a technology that supports remote communication in this new lifestyle. VR began to spread around 2016, thanks to improved performance and lower costs of VR head-mounted displays.

Headsets are becoming familiar, especially for entertainment. However, as three-dimensional imaging technology advances, we are increasingly aware that something is missing from VR experiences. This is probably because VR engages only sight and hearing. If the sense of touch were added, you could remotely touch and manipulate a virtual object floating in the air. Tactile technologies that meet such expectations are being developed one after another in the VR research field, and VR research is now entering its next phase.

2. Mid-Air Haptics by “Airborne Ultrasound”

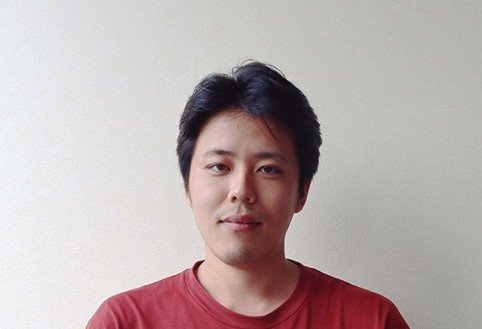

Haptics is currently at the forefront of VR research. Professor SHINODA Hiroyuki leads research on mid-air haptics, which uses airborne ultrasound to generate tactile sensations.

SHINODA Hiroyuki

Professor

Department of Complexity Science and Engineering

Generating various touch sensations without a touch

VR tactile sensations can be experienced in various ways. The most common method is to use VR gadgets, such as wearing special gloves or holding a vibrating controller. Professor Shinoda, however, takes on the challenge of generating the sense of touch with ultrasound, without the user touching any physical object.

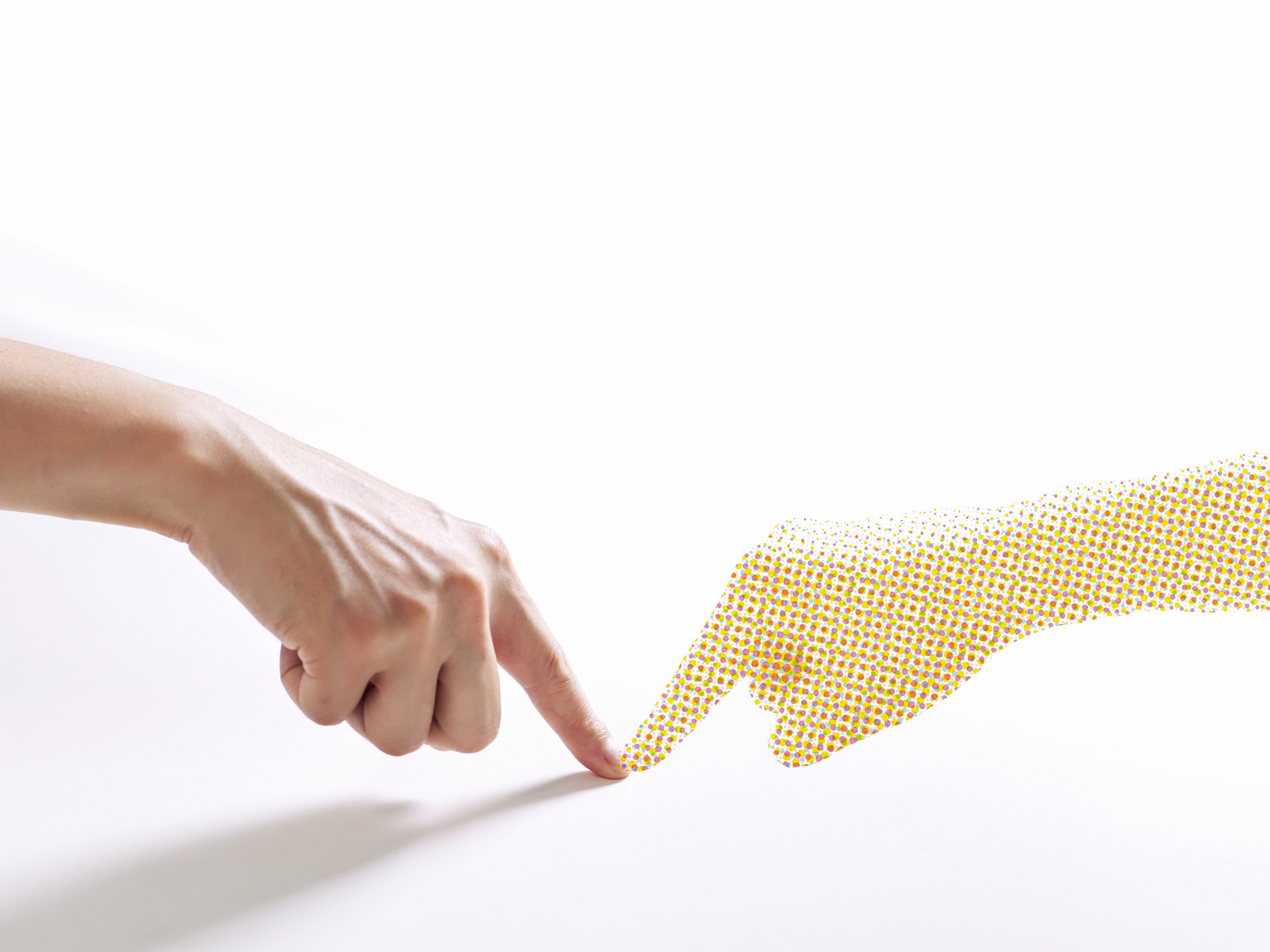

In 2008, his research group reported the world’s first generation of tactile sensations by mid-air ultrasound. They went on to demonstrate this new haptic technology together with synchronized mid-air virtual images, also a world first, and to validate the effect of synchronizing mid-air images with the reproduced sense of touch. Building on these results, they have now begun to generate the tactile sensations of various physical objects.

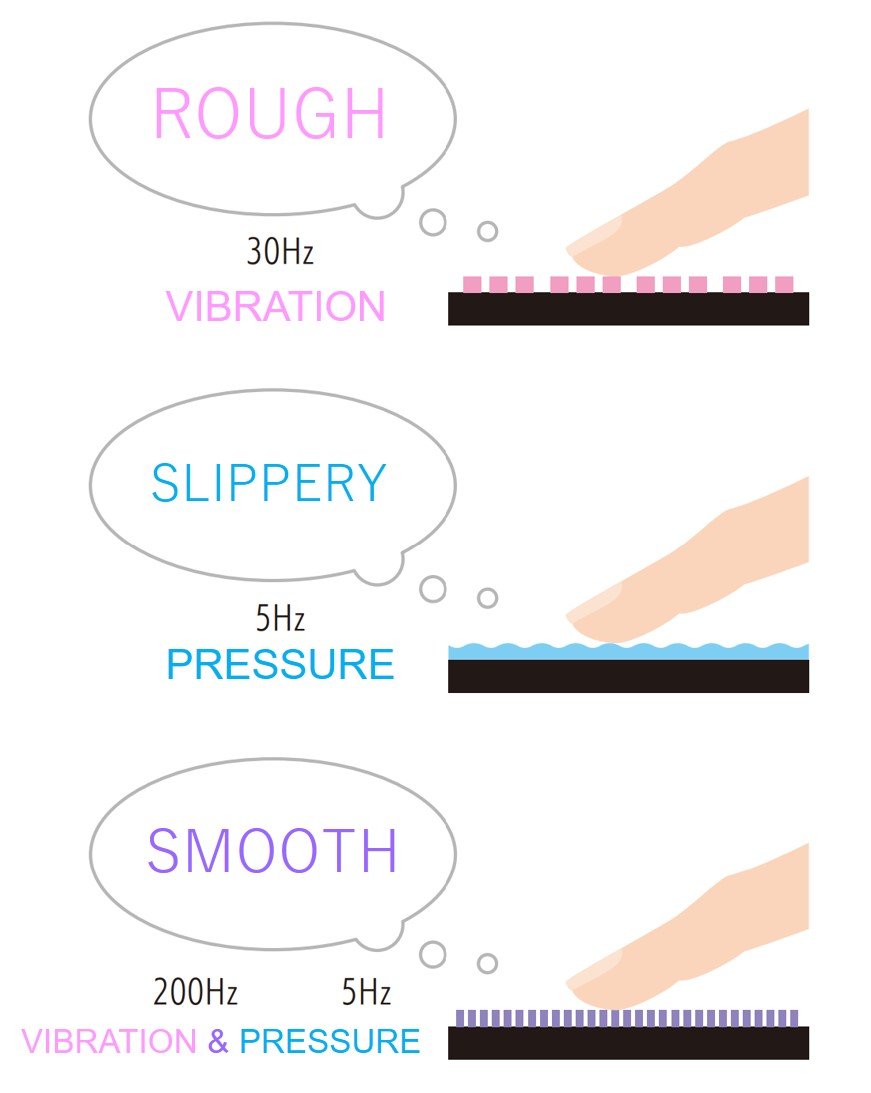

“Reproducing sight and hearing is easy, by recording actual scenes or sounds, but generating tiny pressure points on the skin is still difficult,” Professor Shinoda explains. “The mechanism of touch is only partially understood. Despite these conditions, we have succeeded in producing several types of tactile sensations on the fingertips, such as smoothness, slipperiness, and roughness, using mid-air ultrasound.”

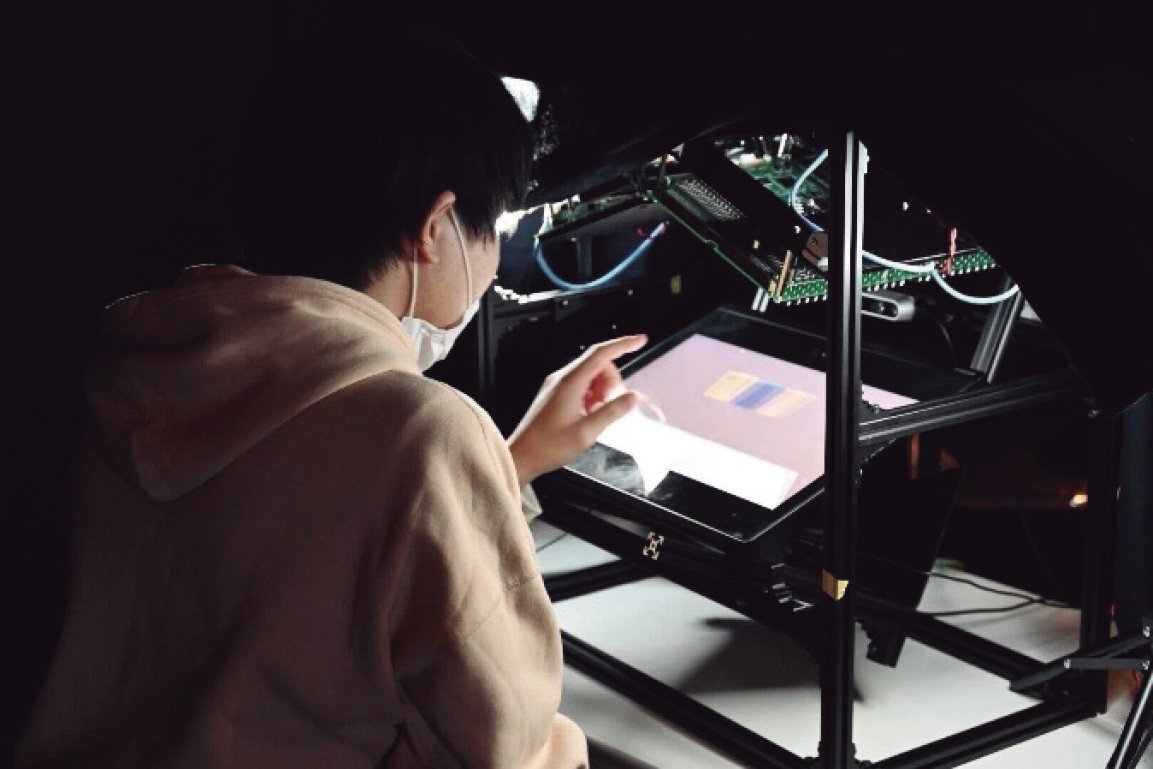

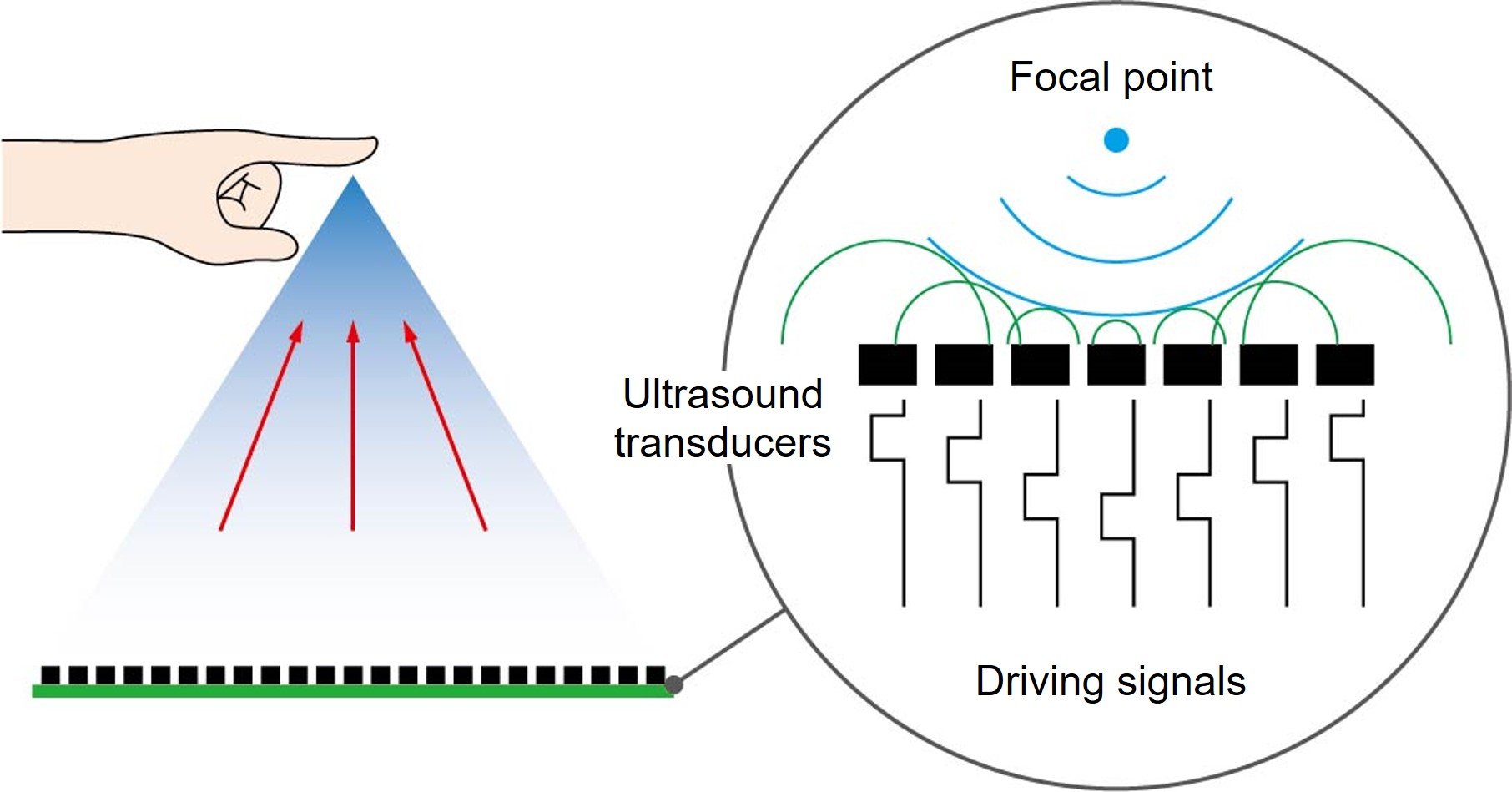

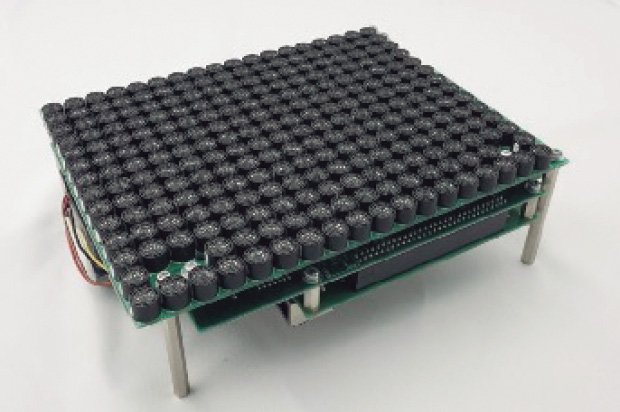

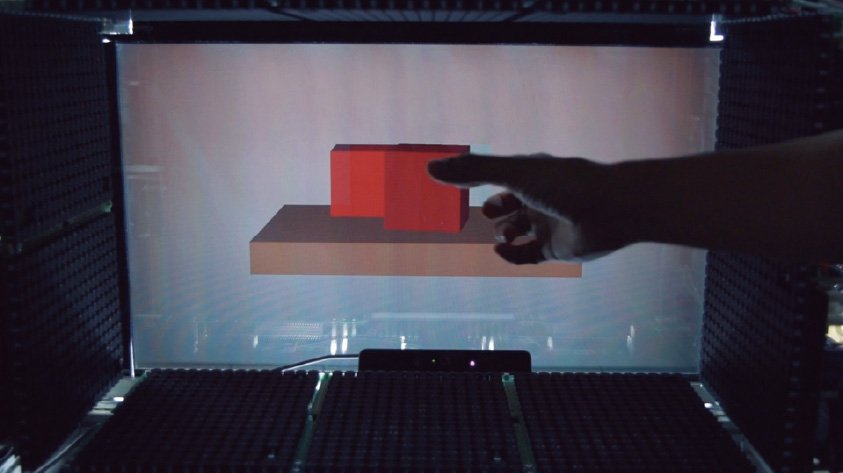

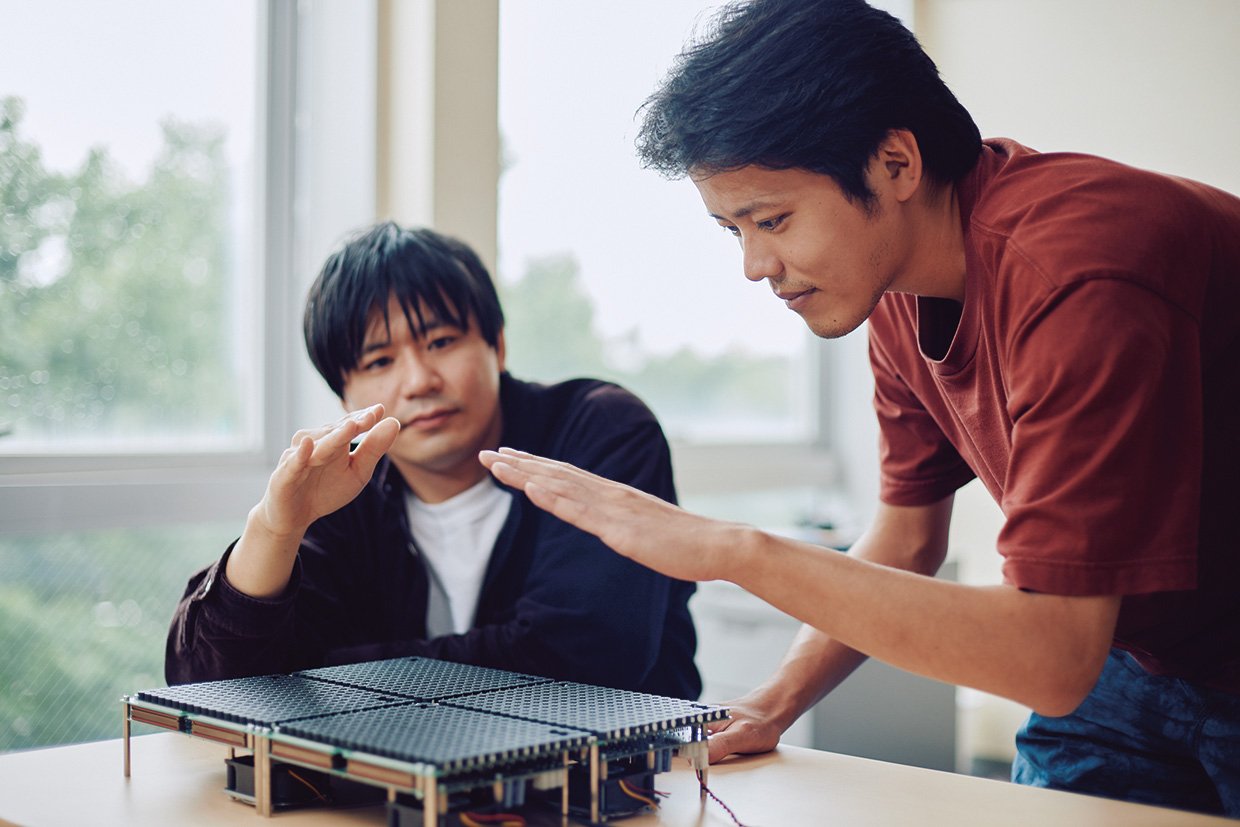

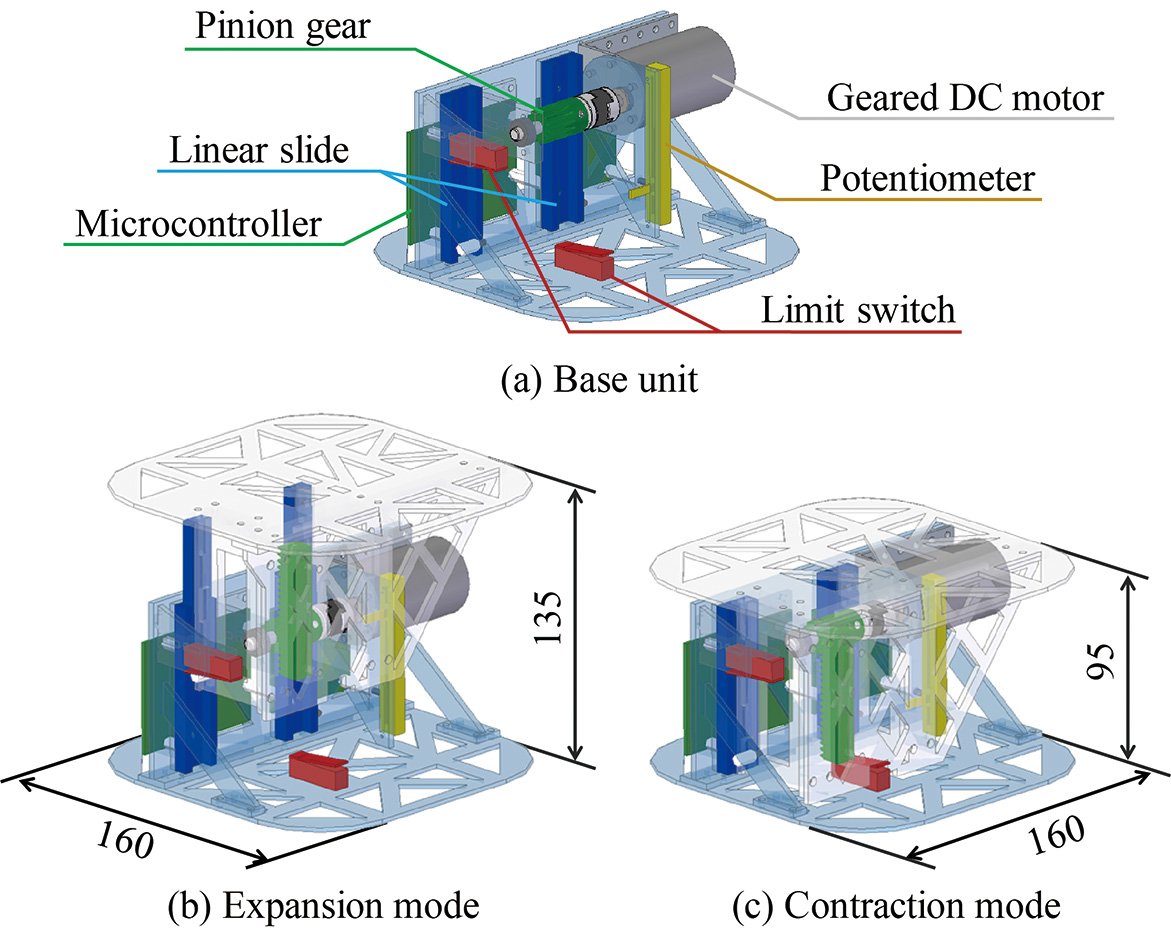

These sensations are produced by a device called the airborne ultrasound tactile display (AUTD), on which over 1,000 ultrasound-emitting transducers are arrayed. It controls the drive timing and output intensity of every transducer with an accuracy of one microsecond or better. The interference of the ultrasound waves emitted by the AUTD produces complex pressure patterns in mid-air. A position sensor detects and tracks the user’s fingertips, allowing the user to feel a variety of touch sensations matched to the 3D images on display. Despite its limitations, this is a breakthrough in haptics technology: rather than stimulating just a single spot, it can distribute pressure freely in space and time within a certain area of the skin.

An airborne ultrasound tactile display (AUTD).

An image of generating pressure sensations by the AUTD.
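The focusing principle behind a phased array such as the AUTD can be illustrated in a few lines. Each transducer is driven with a phase offset that cancels its propagation delay to the focal point, so all waves arrive there in phase and interfere constructively. This is a minimal sketch, not the AUTD’s actual control software: the 40 kHz frequency, 340 m/s speed of sound, 10 mm pitch, 10 × 10 grid, and all function names are assumptions chosen for illustration.

```python
import cmath
import math

CARRIER_HZ = 40_000.0       # assumed ultrasound frequency
SPEED_OF_SOUND = 340.0      # assumed speed of sound in air, m/s
WAVENUMBER = 2 * math.pi * CARRIER_HZ / SPEED_OF_SOUND  # radians per metre


def grid_positions(nx, ny, pitch):
    """Hypothetical flat array: transducer centres on a regular grid in the z = 0 plane."""
    return [(ix * pitch, iy * pitch, 0.0) for iy in range(ny) for ix in range(nx)]


def focus_phases(transducers, focus):
    """Drive phase (radians) for each transducer so that all waves arrive at `focus` in phase."""
    # A wave picks up k*d of phase on its way to the focus, so each transducer
    # is driven with the opposite phase offset to cancel it.
    return [(-WAVENUMBER * math.dist(pos, focus)) % (2 * math.pi) for pos in transducers]


def relative_amplitude(transducers, phases, point):
    """Magnitude of the summed unit phasors at `point` (ignores spreading loss and directivity)."""
    total = sum(cmath.exp(1j * (phase + WAVENUMBER * math.dist(pos, point)))
                for pos, phase in zip(transducers, phases))
    return abs(total)


if __name__ == "__main__":
    array = grid_positions(10, 10, pitch=0.01)   # 100 transducers, 10 mm pitch (assumed)
    focal_point = (0.045, 0.045, 0.15)           # 15 cm above the centre of the array
    drive = focus_phases(array, focal_point)
    print("at focus:", relative_amplitude(array, drive, focal_point))
    print("3 cm away:", relative_amplitude(array, drive, (0.075, 0.045, 0.15)))
```

At the focal point the phasors add up to the number of transducers (100 here); elsewhere they partly cancel. Moving the focus only requires recomputing the phases, which is one reason every transducer’s timing must be controlled so precisely.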

The keys to producing a sensation of pressure are accurate synchronization and position change of the focal point

The AUTD employs various technologies, one of which produces a sensation of pressure. Just as there are three primary colors of light, our fingertips are believed to have three types of tactile receptors, which respond to high-frequency vibration, low-frequency vibration, and pressure and together give rise to various tactile sensations. Ultrasound had already succeeded in producing high- and low-frequency vibratory sensations, but generating pressure sensations had been difficult.

However, Tao Morisaki, a third-year doctoral student, discovered a method. “I found that if you slowly move an ultrasound focal point around a circle at less than 5 Hz, you can produce a sensation similar to pressure,” Morisaki explains. “This technique enabled us to generate even more diverse tactile sensations with mid-air haptics.”
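Morisaki’s description suggests a simple control pattern: update the focal point’s position many times per second so that it traces a small circle at a rotation rate below 5 Hz. The sketch below only generates such a trajectory; each target would then be passed to the array’s focusing routine. The 3 mm radius, 2 Hz rotation, 1 kHz update rate, and the function name are hypothetical choices, not the lab’s published parameters.

```python
import math


def circular_focus_path(center, radius_m, rotation_hz, update_hz, duration_s):
    """Hypothetical helper: focal-point targets tracing a circle in the plane z = center[2].

    rotation_hz must stay below 5 Hz, matching the slow circular motion described in the text.
    """
    if not 0 < rotation_hz < 5:
        raise ValueError("rotation_hz must be positive and below 5 Hz")
    cx, cy, cz = center
    n_steps = int(round(duration_s * update_hz))
    path = []
    for step in range(n_steps):
        t = step / update_hz                      # time of this update, in seconds
        angle = 2 * math.pi * rotation_hz * t     # how far around the circle the focus has moved
        path.append((cx + radius_m * math.cos(angle), cy + radius_m * math.sin(angle), cz))
    return path


if __name__ == "__main__":
    targets = circular_focus_path(center=(0.0, 0.0, 0.2), radius_m=0.003,
                                  rotation_hz=2.0, update_hz=1000.0, duration_s=1.0)
    print(len(targets), targets[0])
```

Keeping the rotation slow is the point: the focus itself is updated quickly, but its position changes gradually enough for the skin to register a sustained, pressure-like sensation rather than a vibration.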

The other key is a technique for synchronizing the AUTD’s many ultrasound transducers with high accuracy. “When you look at an image on a visual display, you don’t perceive any lag as long as the light-emitting elements are synchronized within 30 or 16 milliseconds,” says Shun Suzuki, a Project Research Associate at Shinoda’s laboratory. “With the AUTD, however, synchronization within one microsecond is required for the tactile sensation to feel natural. We finally achieved this using a fieldbus system, a technique used for robot control and factory automation.”
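A quick calculation shows why microsecond-level synchronization matters. Assuming the transducers emit at 40 kHz (an assumption; the text gives no frequency), one period lasts 25 microseconds, so a timing error of 1 microsecond is 0.04 of a period, or a phase shift of 14.4 degrees. A display-style lag of 16 milliseconds would span 640 periods and scramble the interference pattern entirely. The function names below are illustrative.

```python
CARRIER_HZ = 40_000.0  # assumed ultrasound frequency; not stated in the text


def timing_error_in_periods(timing_error_s, carrier_hz=CARRIER_HZ):
    """Number of carrier periods spanned by a drive-timing error."""
    return timing_error_s * carrier_hz


def phase_error_degrees(timing_error_s, carrier_hz=CARRIER_HZ):
    """Phase shift, in degrees (not wrapped to 360), caused by a drive-timing error."""
    return 360.0 * timing_error_in_periods(timing_error_s, carrier_hz)


if __name__ == "__main__":
    for label, error_s in [("1 microsecond", 1e-6), ("16 milliseconds", 16e-3)]:
        print(f"{label}: {timing_error_in_periods(error_s):.2f} periods, "
              f"{phase_error_degrees(error_s):.1f} degrees")
```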

Tactile sensations, according to the types of the tactile receptors.

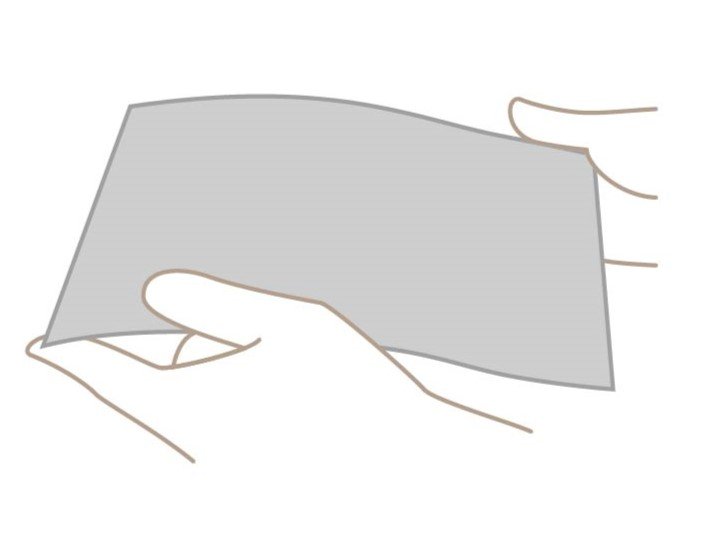

Our Next Challenges Are Developing a Transparent Sheet-Type Device and Generating 3D Tactile Sensations

The next challenges Professor Shinoda and his group are tackling are developing a transparent sheet-type AUTD and generating 3D tactile sensations. They have already started working on making the AUTD thinner. “The ultrasound transducers on the AUTD are thick and bulky. We are trying to develop a sheet-type device that is more efficient, more powerful, and less expensive. Ultimately, we want to make it transparent,” says Takaaki Kamigaki, another Project Research Associate at the laboratory. They are also trying to generate the tactile sensations of grabbing and manipulating 3D virtual objects as if they were real.

“We are trying to find out how to generate the tactile sensations of grabbing and manipulating a virtual object on the palm, and how to reproduce the hardness, contours, and edges of an object as we feel them when touching a physical object. We can now generate the sensation of grabbing and manipulating something soft and cottony, but the research is still in its infancy.” Project Research Associate Atsushi Matsubayashi thus explains the current progress of their research.

Because practical applications of ultrasound haptics have only recently begun, much further progress can be expected.

A single panel of the phased array. It emits ultrasound.

An image of a sheet-type ultrasound device.

Generating the tactile sensation of grabbing an object has just started.

Can the sensations be produced by combining vibration and pressure by ultrasound?

POSSIBILITIES

- You can feel the fabric texture remotely.

- You can touch a virtual object and manipulate it.

- You can communicate with people as if face to face, without risks such as infection.

3. What Should We Expect from Combining VR and AI?

VR research, including haptics, can advance greatly when combined with AI. The Graduate School of Frontier Sciences therefore promotes collaborative research projects on VR and AI.

Action Prediction by Machine Learning

Associate Professor Yasutoshi Makino of the Shinoda and Makino Lab works on systems that predict human actions. “Several years ago, I created a system that predicts a person’s motion 0.5 s ahead. It captures human motion (specifically, the movements of 25 joints of the body) with a depth camera and feeds the data into a five-layer neural network, which predicts the person’s next motion and gives him or her movement suggestions. For walking, it can predict the upcoming motion in real time, with an error of approximately three centimeters at the trunk of the user’s body,” says Makino.

They recently developed a robot that uses this system to move smoothly alongside a walking person. The system could also be applied to strollers or shopping carts. Makino continues, “VR research deals with the physicality of the whole human body. As AI becomes a convenient tool, we can expect VR combined with AI to develop further in various fields, such as robotics.”
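To make the data flow in Makino’s description concrete, here is a minimal sketch of a five-layer feedforward network that maps one frame of 25 three-dimensional joint positions (75 numbers) to predicted joint positions 0.5 s later. The reading of “five-layer” as five weight layers, the layer widths, the ReLU activation, the single-frame input, and the random untrained weights are all assumptions for illustration; the article does not describe the architecture in this detail, and a real system would be trained on recorded motion data.

```python
import random

NUM_JOINTS = 25                             # joints tracked by the depth camera (from the text)
COORDS_PER_JOINT = 3                        # assumed x, y, z per joint
POSE_SIZE = NUM_JOINTS * COORDS_PER_JOINT   # 75 numbers per frame
# "Five-layer" read here as five fully connected weight layers; widths are assumptions.
LAYER_SIZES = [POSE_SIZE, 64, 64, 64, 64, POSE_SIZE]


def init_layers(sizes, seed=0):
    """Random, untrained weights (He-style scale) and zero biases for each layer."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = (2.0 / n_in) ** 0.5
        weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def predict_pose(layers, pose):
    """Forward pass: ReLU on hidden layers, linear output = predicted pose 0.5 s ahead."""
    x = pose
    for i, (weights, biases) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


if __name__ == "__main__":
    net = init_layers(LAYER_SIZES)
    rng = random.Random(1)
    current_pose = [rng.uniform(-1.0, 1.0) for _ in range(POSE_SIZE)]  # placeholder coordinates
    future_pose = predict_pose(net, current_pose)  # untrained, so the numbers are meaningless
    print(len(future_pose))
```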

The robot accompanies a walking person, predicting his motion 0.5 s ahead.

MAKINO Yasutoshi

Associate Professor

Department of Complexity Science and Engineering

POSSIBILITIES

- Reducing delays of transmitting movement information in remote communication systems.

- Improving people’s sports skills and preventing accidents in nursing care.

- Improving physical ability (e.g., enabling people with and without disabilities to play the same sports together).

Weakly Supervised Learning

Professor Masashi Sugiyama, one of Japan’s leading AI researchers, is also Director of the RIKEN Center for Advanced Intelligence Project. He participates in the CREST project led by Professor Shinoda; CREST is a Japanese government funding program. “I’m sure AI can contribute enormously to VR research,” says Sugiyama. “In conventional machine learning, collecting a large amount of training data with perfectly correct labels was a prerequisite. But you cannot always collect that much data with perfectly correct labels. So how can machines learn from a limited amount of data, or from data with imperfect labels? This has been a fundamental question in machine learning.”

This is exactly the problem facing haptics research. It is difficult to collect ample training data of the form “object X produces tactile sensation Y.” By contrast, it is easy to collect data of the form “touching object X produces pressure distribution pattern U” and “pressure distribution U′ produced by ultrasound haptics gives tactile sensation Y′.” Each of these datasets is called “weakly supervised data.” By learning from them with an algorithm designed specifically for this purpose, AI can estimate how a tactile sensation Y produced by mid-air haptics relates to a specific object X.
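The data setting described above can be illustrated with a deliberately simple stand-in: fit one model on the easy-to-collect (X, U) pairs, fit another on the (U′, Y′) pairs, and chain them to estimate Y from X, even though no (X, Y) pair was ever observed. This is a plain two-stage least-squares regression on made-up scalar numbers, not Professor Sugiyama’s weakly supervised learning algorithms, which handle such problems far more rigorously. All feature names and values are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y ≈ slope * x + intercept for scalar data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Hypothetical dataset 1: object property X -> pressure-pattern feature U measured when touching it.
object_x = [1.0, 2.0, 3.0, 4.0]
pressure_u = [2.1, 3.9, 6.0, 8.0]

# Hypothetical dataset 2: ultrasound pressure feature U' -> reported sensation score Y'.
pressure_u_prime = [1.0, 3.0, 5.0, 7.0]
sensation_y_prime = [0.6, 1.4, 2.6, 3.4]

X_TO_U = fit_line(object_x, pressure_u)
U_TO_Y = fit_line(pressure_u_prime, sensation_y_prime)


def estimate_sensation(x):
    """Chain the fits (X -> U, then U treated as U' -> Y); no (X, Y) pair was ever observed."""
    slope1, intercept1 = X_TO_U
    slope2, intercept2 = U_TO_Y
    return slope2 * (slope1 * x + intercept1) + intercept2


if __name__ == "__main__":
    print(round(estimate_sensation(2.0), 4))
```

Chaining only works if U and U′ live on a comparable scale; dealing with such mismatches and with noisy or partial labels is precisely what dedicated weakly supervised methods address.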

Professor Sugiyama has been working on a variety of broadly applicable theories and algorithms that address some of the most fundamental concepts of AI. In spring 2022, he received the Commendation for Science and Technology from the Japanese government for his research on “weakly supervised learning.”

SUGIYAMA Masashi

Professor

Department of Complexity Science and Engineering

4. New Technologies and Applications of VR

At the Graduate School of Frontier Sciences, research is conducted not only on mid-air haptics but also on other VR technologies and their applications. Here we introduce some of them.

Cross-Modal Phenomena: Generating Tactile Sensations by Illusion

VR devices such as headsets, which let you experience virtual reality through sight and hearing, have steadily improved, and haptic technology that adds a sense of touch to VR devices is now in the application phase. “For higher-quality VR experiences, cross-modal phenomena are essential,” says Project Lecturer Yuki Ban.

A cross-modal phenomenon is an interaction between inherently different sensory modalities, such as sight and hearing, or sight and taste. Such interactions come in two types: quantitative phenomena, which strengthen or weaken a sensation, and qualitative phenomena, which shift or change its character. By exploiting them, realistic sensations can be produced without presenting stimuli of exactly the same physical magnitude as real ones.

Ban is currently working on combining three senses: sight, hearing, and touch (see the photo below). When the female character on the screen blows, you feel air hit your ear as if she had really breathed on you.

He also conducts other experiments. One shows that you feel fuller when you eat a cookie while looking at an image of a cookie larger than the one you are actually eating. Another produced a device called “Relaxushion,” a cushion that helps the user breathe slowly in order to relax. “I want to make people feel better by making effective use of human cognitive characteristics. I will continue to pursue broad basic research toward this goal,” says Ban.
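The idea of a cushion that paces breathing can be sketched as a simple timing schedule that alternates inflation (a cue to breathe in) and deflation (a cue to breathe out). The rate of six breaths per minute, the 4 s inhale and 6 s exhale split, and the function name are hypothetical values for illustration, not Relaxushion’s actual settings.

```python
def breathing_schedule(breaths_per_minute=6.0, inhale_fraction=0.4, cycles=3):
    """Hypothetical pacing plan: list of (action, start_s, duration_s) for a breathing cushion."""
    if breaths_per_minute <= 0 or not 0 < inhale_fraction < 1:
        raise ValueError("invalid pacing parameters")
    cycle_s = 60.0 / breaths_per_minute       # length of one full breath
    inhale_s = cycle_s * inhale_fraction
    exhale_s = cycle_s - inhale_s             # a longer exhale is assumed to aid relaxation
    schedule = []
    t = 0.0
    for _ in range(cycles):
        schedule.append(("inflate", t, inhale_s))   # cushion expands: cue to breathe in
        t += inhale_s
        schedule.append(("deflate", t, exhale_s))   # cushion contracts: cue to breathe out
        t += exhale_s
    return schedule


if __name__ == "__main__":
    for action, start, duration in breathing_schedule():
        print(f"{start:5.1f} s  {action:<8} for {duration:.1f} s")
```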

Visual and auditory stimuli create the illusion that wind blowing from the front is coming from another direction.

VWind: You feel a pseudo-breath around your ear, created by warmth and vibration together with sound.

Relaxushion: The cushion inflates and deflates to guide the user to breathe steadily and deeply to relax.

BAN Yuki

Project Lecturer

Department of Human & Engineered Environmental Studies

POSSIBILITIES

- Helping people feel better.

- For example, helping people relax and become more productive.

Applying VR for Learning Mechanisms (Education with VR Technology)

So far, VR has been applied mainly to entertainment gadgets, such as game controllers. From now on, however, VR should be applied in a much wider range of settings, such as education and other industries. Satori Hachisuka, a Project Lecturer of Innovative Learning Creation Studies at the Graduate School of Frontier Sciences, studies learning mechanisms.

“Learning mechanisms have traditionally been studied in pedagogy. What is new about my research is the approach I am developing: I use measuring instruments and VR technology,” Hachisuka explains.

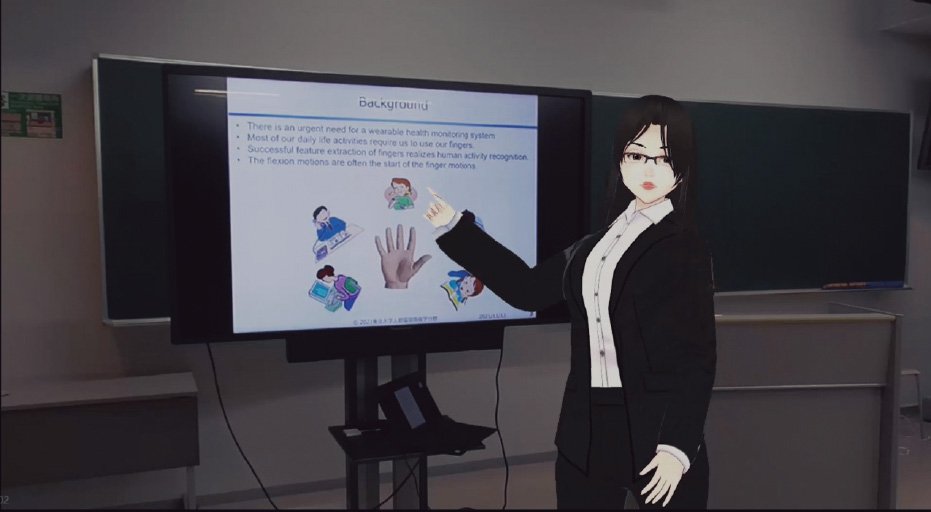

Specifically, her goal is to tailor teachers’ lessons to each student’s characteristics, including ability, curiosity, and visual attention, in order to enhance learning motivation and effectiveness. For example, she has created various teacher avatars that teach a lesson in diverse ways, making customized teaching possible.

In other research, she may use haptics and VR technology for handwriting practice, which children begin in the lower grades of primary school. Students usually practice by repeatedly copying model characters from the textbook, but Hachisuka aims to support learning with virtual sounds so that children can move their hands naturally and write letters correctly. Combining this approach with haptic pursuit* could make it even more effective.

*Haptic pursuit: The eye movement that follows a moving object is called “pursuit” or the “ocular following response.” Similarly, it has been verified that the hand follows a slow-moving haptic stimulus generated by ultrasound.
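Haptic pursuit suggests one possible way to guide a hand along a letter stroke: move the ultrasound focal point along the stroke’s path at a slow, constant speed and let the hand follow it. The sketch below resamples a polyline stroke into evenly timed focal-point targets. The stroke coordinates, the 2 cm/s speed, the 100 Hz update rate, and the function name are hypothetical; the article does not say how such guidance would actually be implemented.

```python
import math


def stroke_targets(waypoints, speed_m_s, update_hz):
    """Points along a polyline, spaced so a focus moving at speed_m_s is sampled at update_hz."""
    step = speed_m_s / update_hz        # distance the focus travels per update
    targets = [waypoints[0]]
    carried = 0.0                       # distance already travelled since the last target
    for start, end in zip(waypoints[:-1], waypoints[1:]):
        seg_len = math.dist(start, end)
        pos = step - carried            # where the next target falls on this segment
        while pos <= seg_len:
            frac = pos / seg_len
            targets.append(tuple(s + frac * (e - s) for s, e in zip(start, end)))
            pos += step
        carried = seg_len - (pos - step)  # leftover distance rolls over to the next segment
    return targets


if __name__ == "__main__":
    # Hypothetical "L"-shaped stroke, 20 cm above the array, in metres.
    stroke = [(0.0, 0.04, 0.2), (0.0, 0.0, 0.2), (0.03, 0.0, 0.2)]
    path = stroke_targets(stroke, speed_m_s=0.02, update_hz=100.0)
    print(len(path), path[0], path[-1])
```

Carrying the leftover distance across corners keeps the focus moving at a constant speed along the whole stroke, which matters if the hand is meant to follow a slow, steady stimulus.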

A lesson taught by an avatar teacher.

HACHISUKA Satori

Project Lecturer

Department of Human & Engineered Environmental Studies

POSSIBILITIES

- When learning calligraphy, children’s hands move naturally in response to auditory and tactile stimuli, so they can easily get the knack of writing.

- Students who are not attending school can also receive the same quality of education.

5. The Future of VR Research at the GSFS

If haptics is put to practical use in the near future, it will have a significant impact on the world. According to Professor Shinoda, however, many challenges remain in haptics development. “The most difficult part is improving the devices. Making a lighter, thinner, high-power AUTD requires knowledge of materials such as piezoelectric materials, high-performance polymers, and organic semiconductors, as well as of their production techniques. The use of AI, especially machine learning, is also highly important. In the future, haptics will be applied extensively in education and urban design. Regardless of academic specialty, I am eager to collaborate with researchers in various fields,” says Shinoda. This kind of free and flexible collaborative research is a strength of the Graduate School of Frontier Sciences, and it gives good reason to expect further advances in VR research at the school.

Ultimately, VR research aims to support human life and activities and to create new connections between people and society through virtual reality. One day, when everyone can use VR devices as naturally and easily as the Internet, VR will be able to contribute to people’s wellbeing and to the prosperity of society.

vol.40

- Cover

- Feature Article: The Forefront of VR - POSSIBILITY OF HAPTICS

- Material Science: The Driver of Electron Device Evolution

- Floating Platform for Sustainable System

- GSFS FRONTRUNNERS

- Voices from International Students

- ON CAMPUS x OFF CAMPUS

- EVENT & TOPICS

- Awards

- INFORMATION

- Relay Essay: School in Switzerland